Security in the Vibe Code Era, Part 2: Policy Automation and the Competitive Dynamic

Posted by: Victor Wieczorek

In Part 1 of this two-part series, we explored the idea of vibe code and discussed how security can become a partner to enable secure vibe coding practices. In Part 2, we’re going to dive into how security and business units can capitalize on the competitive dynamic between vibe coders, and provide pointed examples of how policy automation for vibe coding can drive secure innovation without introducing unnecessary roadblocks.

Policy Automation and Vibe Coding: Which Ideas Move Forward?

Here’s what I’ve seen in practice:

You probably have multiple people in your organization who vibe code to varying degrees. Some are identifying problems and tackling them with AI assistance.

Then you realize: other people are solving similar problems.

It becomes a race condition. An internal competition. You may even want that. It’s a proven method for bubbling the best ideas to the top, and it’s natural to want the best solution to surface.

But most vibe-coded tools never surface for formal evaluation. They live and die in the shadows. The output they generate looks like the product of manual work. No one asks how it got done.

Visibility Happens at Inflection Points

Vibe code stays hidden until something needs to scale or something breaks, or until someone wants credit. Or, ideally, until the culture invites disclosure.

And at those inflection points, the culture you’ve built determines what happens next.

In Culture A (compliance is invisible), the person who surfaces their work hits a wall. They didn’t know about the requirements. They have to go back and retrofit. Their momentum stalls. Maybe their idea dies.

In Culture B (compliance is baked in), the person who surfaces their work is already prepared. They had the wherewithal to learn the policies, find the approved tools, and work out what adjustments were needed. Their AI helped them think ahead. They show up ready to collaborate.

Consider three people who all built similar solutions:

| Person A | Person B | Person C |
|---|---|---|
| No documentation | Basic documentation | Full documentation |
| No security consideration | “I think it’s secure” | AI already checked policies |
| No compliance artifacts | Manual compliance check | SBOMs, licensing, supply chain pre-done |
| Friction at the inflection point | Some friction | Smooth path forward |

Person C’s idea moves forward. Not because they’re a better coder. They may not be a coder at all. But their idea gains traction because they operated in a culture where guidance was available, and they had the initiative and knowledge to use it.

This isn’t about gatekeeping. (Remember, there is no gate.) It’s about what happens when work that was invisible becomes visible, and whether that moment is collaborative or adversarial.

The People Who Win are the Ones Who Think Ahead

The cultures that win are the ones that make thinking ahead easy.

Policy Automation as an Administrative Advantage

If I come to the table with a proposal that looks similar to my neighbor’s (maybe theirs is even slightly better coded) and I have:

- Policy compliance? Documented.

- Security considerations? Addressed.

- Approvals? Lined up.

- Supply chain validation? Done.

- Licensing checks? Complete.

- Administrative boxes? Checked.

…because my agents knew about these requirements, thought ahead, and made it happen?

My ideas are more likely to move forward. Not because I out-competed anyone, but because I tried to show up ready to collaborate.

That’s the difference between fighting for approval and earning trust. Automating policies and making them findable becomes the secret sauce that sets vibe coders up for success. Because the policies are findable, understood, and easily integrated into the vibe coding process, the collaboration is baked in from the beginning.

Setting Realistic Expectations

Policy automation doesn’t turn a vibe coder into a software developer. Their AI-assisted compliance work isn’t going to be perfect. It won’t match what a trained, professional developer would produce. The Software Bill of Materials (SBOM) might be incomplete. The security considerations might miss edge cases. The documentation might have gaps.
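To make “the SBOM might be incomplete” concrete, here is a minimal sketch of the kind of rough dependency inventory an AI assistant might produce for a vibe-coded Python tool. It assumes the tool runs in its own Python environment and uses only the standard library’s `importlib.metadata`; the function name `dependency_inventory` is hypothetical. This is a stand-in, not a real SBOM: formal formats such as CycloneDX or SPDX capture far more (hashes, dependency relationships, supplier data).

```python
"""Rough dependency inventory for a Python tool: a stand-in for an SBOM, not a real one."""
from importlib.metadata import distributions


def dependency_inventory() -> list[dict[str, str]]:
    """Return one {name, version, license} record per installed distribution."""
    records = []
    for dist in distributions():
        meta = dist.metadata
        if meta is None:  # skip broken installs with no metadata file
            continue
        name = meta.get("Name") or "unknown"
        # Prefer the newer License-Expression field, then the older License field.
        license_ = (meta.get("License-Expression") or meta.get("License") or "").strip()
        if license_:
            # Some packages paste the full license text here; keep only the first line.
            license_ = license_.splitlines()[0]
        else:
            # Many packages declare their license only through trove classifiers.
            classifiers = meta.get_all("Classifier") or []
            license_ = "; ".join(
                c.split("::")[-1].strip() for c in classifiers if c.startswith("License ::")
            ) or "UNKNOWN"
        records.append({"name": name, "version": dist.version or "unknown", "license": license_})
    return sorted(records, key=lambda r: r["name"].lower())


if __name__ == "__main__":
    for rec in dependency_inventory():
        # Flag packages with no license data so a human can follow up.
        flag = "  <-- needs review" if rec["license"] == "UNKNOWN" else ""
        print(f'{rec["name"]}=={rec["version"]}  [{rec["license"]}]{flag}')
```

Entries flagged for review are exactly the gaps a security or legal reviewer can close quickly. The output is imperfect, but it gives downstream stakeholders something to start from instead of nothing.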

But even 10% is better than zero.

Just the acknowledgment that there’s work to be done, that downstream stakeholders exist and have requirements, is a massive shift from where we are today.

Think about what happens without a security mindset. A developer — or now, a vibe coder — runs face-first into security without understanding what’s required. They didn’t know there was a review process. They didn’t know about the compliance requirements.

When vibe coders get blocked outright, they get frustrated. Silos form, disenchantment brews, bad relationships calcify. The security team becomes the enemy instead of a partner.

But if you reframe expectations upfront, for yourself as a vibe coder, and for the security org enabling you:

- You’re not going to slam dunk every time. That’s fine.

- You can spend some time thinking ahead about what’s required.

- You can go find that information, or ask your AI to find it.

- You can feed that context to your AI and make meaningful progress, even if it’s fractional.

And that fractional progress? It matters.

Security isn’t just about technical controls; it’s about trust, collaboration and working relationships.

The person who shows up having thought about the downstream implications, even imperfectly, is someone people want to work with.

You can already see this playing out. The people, the teams, the departments who are thinking ahead? They’re already more successful.

Not because they’re more skilled, but because they’re more prepared.

They’ve reduced friction. They’ve built trust.

Making Policy Automation Accessible

When the information is out there, the AI can help you find it, understand it, and apply it. All it takes is the intention to look. Bonus points if you provide vibe coding tools that are preconfigured to apply your policies.

For security orgs: this is why making your guidance findable matters so much. You’re not just publishing policies. You’re creating the conditions for people to succeed. You’re making it easy to do the right thing.

Honest Acknowledgment – Nothing is Perfect

Some tools may never surface. They’ll run in the shadows forever, never needing to scale, never breaking, never getting caught. The enabler model doesn’t eliminate shadow AI.

But it does two things:

- It improves the odds that what does surface is in better shape. Less cleanup, more collaboration.

- It increases the likelihood that things surface at all. When the path forward is less adversarial, people are more willing to come out of the shadows.

Organizations that want to go further can offer hands-on training. Security team members with development experience are uniquely positioned to coach vibe coders on what to look for and why it matters. They speak the language. The alternative is relying solely on controls that, for many organizations, aren’t comprehensive enough to catch everything. Enablement doesn’t replace controls. It covers the gaps.

What Policy Automation Looks Like in Practice

Remember: We’re talking about non-coders using AI here. Policy automation files for vibe code can – and should – be readable, understandable, and straightforward. These documents are not formal policy-as-code or compliance automation frameworks. That type of policy automation is reserved for the inner ring (i.e., DevSecOps). This is simpler:

Step 1: Publish Machine-readable Policies with Organizational Context

Example: Security Context File

# Security Guidelines for AI-Assisted Development

When building any tool or automation:

## Authentication & Authorization

- All endpoints that access data must require authentication

- Never hardcode credentials. Use environment variables

- If you need an API key, request one through [link]

## Data Handling

- Don't store PII in local files

- If you're processing customer data, check with [team] first

- Logs should never contain passwords or tokens

## Before You Deploy

- Run it by [security contact] if it touches production data

- If it calls external APIs, document which ones

- If you're not sure, ask. We'd rather help early than clean up later

## Getting Help

- Slack: #security-help

- Office hours: Tuesdays 2-3pm

- Quick questions: [email]

That’s it. Plain text. Findable. The AI assistant helping the vibe coder build can read it and apply it.
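Many coding assistants can simply be pointed at a file like this. Where a tool can’t, a thin wrapper can put the guidance in front of the model for every request. The sketch below assumes the guidance is published as `SECURITY_GUIDELINES.md` in the project root or a `docs/` folder; those paths and the `load_security_context` / `build_prompt` helpers are hypothetical, and how the resulting prompt reaches the model depends on your assistant.

```python
from pathlib import Path

# Hypothetical locations where a team might publish its guidance file.
CANDIDATE_PATHS = [Path("SECURITY_GUIDELINES.md"), Path("docs/SECURITY_GUIDELINES.md")]


def load_security_context(search_root: Path = Path(".")) -> str:
    """Return the text of the first guidance file found, or an empty string."""
    for rel in CANDIDATE_PATHS:
        path = search_root / rel
        if path.is_file():
            return path.read_text(encoding="utf-8")
    return ""


def build_prompt(task: str, search_root: Path = Path(".")) -> str:
    """Prepend the organization's security guidance to the user's request."""
    guidance = load_security_context(search_root)
    if not guidance:
        # Say so explicitly, so the assistant can nudge the user to go ask.
        return f"(No security guidelines found.)\n\nTask: {task}"
    return (
        "Follow these organizational security guidelines while completing the task.\n"
        f"--- BEGIN GUIDELINES ---\n{guidance.strip()}\n--- END GUIDELINES ---\n\n"
        f"Task: {task}"
    )
```

For example, `build_prompt("Write a script that emails a weekly sales summary")` returns the request wrapped in the team’s guidelines, so the assistant sees “never hardcode credentials” before it writes a single line.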

Step 2: Shift from Review to Guidance

| Gatekeeper Approach | Enabler Approach |
|---|---|
| “Submit for security review” | “Here’s what to think about as you build” |
| “Blocked pending approval” | “Let us know if you need help with this part” |
| “Your code failed our scan” | “Common issues we see, and how to avoid them” |
| Reactive | Proactive |
| After the fact | Before the first line |
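A “common issues” page works best with a small, copyable fix next to each issue. As one illustration, here is a sketch covering two items from the sample guidelines: hardcoded credentials and secrets leaking into logs. The environment variable name `REPORTING_API_KEY` and the helper names are hypothetical; the redaction uses Python’s standard `logging.Filter` and only scrubs the formatted message text, not tracebacks or other record fields.

```python
import logging
import os


class MissingCredentialError(RuntimeError):
    """Raised when a required credential is not set in the environment."""


def get_api_key(var_name: str = "REPORTING_API_KEY") -> str:
    # Common issue: API_KEY = "..." pasted directly into the script, where it ends up
    # in source control, chat transcripts, and every copy of the file.
    # Fix: read it from an environment variable at runtime instead.
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise MissingCredentialError(
            f"{var_name} is not set. Request a key through the approved channel "
            "and export it in your environment."
        )
    return key


class RedactSecretsFilter(logging.Filter):
    """Replace known secret values in log messages with a placeholder."""

    def __init__(self, secrets: list[str]) -> None:
        super().__init__()
        self._secrets = [s for s in secrets if s]

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merge msg and args before scrubbing
        for secret in self._secrets:
            message = message.replace(secret, "[REDACTED]")
        record.msg, record.args = message, None
        return True  # keep the record, just sanitized
```

Attaching the filter to a handler (for example, `handler.addFilter(RedactSecretsFilter([get_api_key()]))`) rather than to a logger means it also sees records propagated from child loggers.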

Step 3: Create the Conditions for Pre-compliance

The goal is that by the time the vibe coder finishes building, they’ve already:

- Thought enough about security to point their AI at the guidance docs

- Avoided the common pitfalls because the guidance was in context

- Documented what they needed to because the template was there

- Reached out early if something was unusual

Not perfect compliance. Maybe 30%, maybe 60%, maybe 80% depending on the domain. But meaningfully better than zero, which is what you get when you’re invisible to them. And it builds the relationship that will make all future initiatives easier.
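“Documented what they needed to because the template was there” can be as simple as the AI drafting a section the guidelines ask for. The sample file says to document any external APIs; the sketch below shows one way an assistant might draft that section by scanning a project’s Python files for hard-coded URLs. The function names are hypothetical, and a plain text scan will miss URLs assembled at runtime, so the output is a starting draft for a human to confirm, not a compliance check.

```python
import re
from pathlib import Path
from urllib.parse import urlparse

# Literal http(s) URLs, stopping at whitespace, quotes, angle brackets, or ")".
URL_PATTERN = re.compile(r"https?://[^\s'\"<>)]+")


def external_hosts(project_dir: Path, pattern: str = "*.py") -> dict[str, list[str]]:
    """Map each external host to the (relative) files that mention it."""
    hosts: dict[str, set[str]] = {}
    for path in sorted(project_dir.rglob(pattern)):
        text = path.read_text(encoding="utf-8", errors="ignore")
        for url in URL_PATTERN.findall(text):
            host = urlparse(url).hostname  # lowercased, port stripped
            if host:
                hosts.setdefault(host, set()).add(path.relative_to(project_dir).as_posix())
    return {h: sorted(files) for h, files in sorted(hosts.items())}


def external_api_section(project_dir: Path) -> str:
    """Render a draft 'External APIs' section for the project's README."""
    found = external_hosts(project_dir)
    if not found:
        return "## External APIs\n\nNone found by automated scan (please confirm)."
    lines = ["## External APIs", ""]
    for host, files in found.items():
        lines.append(f"- {host} (referenced in: {', '.join(files)})")
    lines.append("")
    lines.append("Draft generated by a text scan; URLs built at runtime are not detected.")
    return "\n".join(lines)
```

Even a partial list like this turns “If it calls external APIs, document which ones” from a forgotten requirement into a reviewable artifact.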

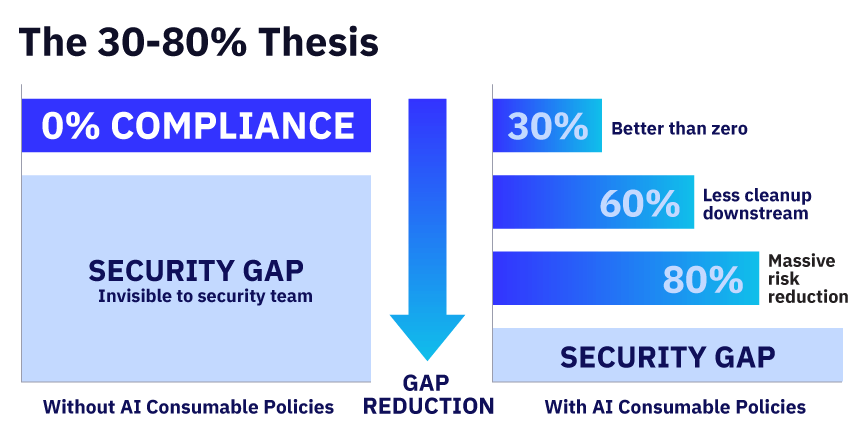

The 30-80% Thesis

We don’t need perfection. Aiming for 100% compliance will set everyone up for failure. Policy automation for vibe coding is not about re-creating DevSecOps in the outer ring. It simply introduces security at a fundamental level so that you reduce risk while enabling innovation.

Even partial automation of compliance activities delivers disproportionate value:

| If the AI helps them get… | That’s still… |
|---|---|
| 30% of security considerations | Better than 0% |
| 60% of documentation done | Less cleanup downstream |
| 80% of common pitfalls avoided | A massive reduction in rework – and risk |

The vibe coder isn’t going to become a security expert. They’re not even going to become a developer. Their AI assistant isn’t going to replace your security review. But if the AI surfaces the right questions at the right time, the delta is enormous.

You’re not trying to make them perfect. You’re trying to shrink the gap — so when you do get involved, it’s collaboration, not cleanup. And most importantly, it’s not damage control.

A Call to Action

If you’re in a security organization, here’s the reality: vibe coding is already happening. The gatekeeper model assumes there’s a gate. For the outer ring, there isn’t one.

The question isn’t whether vibe coders will build things. They already are. The question is whether your security guidance is in the room when they start building, or whether you find out about it later.

If you’re looking to make your security policies work for AI-assisted development, GuidePoint’s AI Governance Services can help. We work with security teams to make compliance findable, usable, and built into the process from the start.

Victor Wieczorek

VP, AppSec and Threat & Attack Simulation,

GuidePoint Security

Victor Wieczorek drives offensive security innovation at GuidePoint Security, leading three professional services practices alongside the operational teams behind that work. This creates a feedback loop that makes delivery better for everyone. His practices (Application Security, Threat & Attack Simulation, and Operational Technology) cover the full offensive spectrum: secure code review, threat modeling, and DevSecOps programs; red and purple team assessments, penetration testing, breach simulation, and social engineering; OT risk assessments, framework alignment, and critical infrastructure security.

Before GuidePoint, Wieczorek designed secure architectures for federal agencies at MITRE and led security assessments at Protiviti. He holds OSCE and OSCP, and built depth in governance and compliance (previously held CISSP, CISA, PCI QSA) to bridge offensive work with risk communication. His teams operate with a clear philosophy: enable clients to be self-sufficient. That means detailed reproduction steps with real commands, no proprietary tooling that obscures findings, and deliverables designed so organizations can act without dependency. Under his leadership, GuidePoint achieved CREST accreditation and he was named to CRN's 2023 Next-Gen Solution Provider Leaders list.

His current focus reflects where the industry is heading. As AI agents move into production, both as threats and as security tools, Wieczorek has been thinking through what governance looks like for autonomous systems. His view: the more capable the technology, the more essential human accountability becomes. He speaks on this through various webinar series, industry podcasts, and annual conferences.